Automatic tuning of parameters

Introduction

What follows is the abstract of [Sheik et al., 2010].

In recent years, several research groups have proposed neuromorphic devices that implement event-based sensors or biophysically realistic networks of spiking neurons. It has been argued that these devices can be used to build event-based systems that solve real-world problems in real time, with an efficiency and robustness that cannot be achieved with conventional computing technologies.

In order to implement complex event-based neuromorphic systems, it is necessary to interface the neuromorphic sensors and devices with each other, with robotic platforms, and with workstations (e.g. for data logging and analysis). This apparently simple goal requires painstaking work that spans multiple levels of complexity and disciplines: from the custom layout of microelectronic circuits and asynchronous printed circuit boards to the development of object-oriented classes and methods in software; from electrical engineering and physics for analog/digital circuit design to neuroscience and computer science for neural computation and spike-based learning methods.

Within this context, we present a framework we developed to simplify the configuration of multi-chip neuromorphic systems, and automate the mapping of neural network model parameters to neuromorphic circuit bias values.

- cost function

- recurrent structure

- modularity

A black-box approach

Many academic groups around the world have already presented general, system-independent tools for the control of neuromorphic systems. The pyNN package (pronounced "pine") is one of the most prominent examples.

Most of these packages were developed with a strong focus on software simulators: one writes simulator-independent pyNN code and then chooses which simulator performs all the internal operations, such as the integration of differential equations and the communication between neurons. The structure is more or less common to all software simulators: first the parameters are prepared, then the simulation is run, usually by calling a run function. This structure is not compatible with real-time hardware, where the computation is always ongoing and the user needs to interact with the system (e.g. monitor, control, stimulate) at any time.

One of the reasons for developing pyTune independently is its strong focus on real-time, interactive control of general-purpose systems. For example, with the pyNCS plugin of pyTune, every action the user takes, e.g. setting biases, has an immediate effect. As a consequence, the package does not rely on a one-time run call, which makes the integration with pyNCS seamless.

Most importantly, the parameters of VLSI hardware systems are usually very hard to configure because of the non-idealities of the hardware itself. This is obviously not a problem with software simulators. Tools like pyNN, which were first developed for software simulators and then adapted to interface with specific hardware systems, do not address this problem: they rely on a preliminary calibration to infer the system's hidden non-idealities, so that parameters such as time constants can then be set offline through a set of pre-defined equations. With these equations, setting the system parameters reduces to a more or less one-to-one parameter translation, which is easy and fast.

However, calibration procedures can be very time-consuming, and the high number of parameters can make them impractical. Parameters such as the dark currents of transistors in a VLSI circuit also depend strongly on environmental conditions: even small changes in temperature can affect their values. As a consequence, the calibration has to be repeated whenever the environment changes in order to keep these estimates correct, which makes this approach even less efficient for hardware meant to operate, for example, on robotic platforms.

With these considerations in mind, we designed pyTune to measure the quantities of interest directly. This approach has the obvious drawback of a slower interaction with the system, because quantities cannot simply be translated but have to be measured through complex procedures. On the other hand, it has the advantage of a pure black-box approach: a complete description of the system is not needed, since its behavior can be inferred from the measurements. This is what gives pyTune the flexibility that real-time, hardware-based neuromorphic systems require.
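To make this trade-off concrete, here is a toy Python sketch (not part of pyTune) contrasting the two approaches on a single subthreshold transistor, whose current follows the textbook law $I = I_0 e^{\kappa V / U_T}$. The hidden dark current `i0` drifts after calibration: the open-loop translation then misses the target, while a simple bisection that relies only on measurements still reaches it. The constants, the drift factor, and all function names are illustrative assumptions.

```python
import math

KAPPA = 0.7   # subthreshold slope factor (illustrative value)
UT = 0.025    # thermal voltage in volts, roughly at room temperature


def measured_current(v_gate, i0):
    """Stand-in for a measurement: subthreshold transistor current.

    i0 plays the role of the hidden, temperature-dependent dark current.
    """
    return i0 * math.exp(KAPPA * v_gate / UT)


def open_loop_bias(target, i0_calibrated):
    """Calibration approach: invert the model using the calibrated i0."""
    return UT / KAPPA * math.log(target / i0_calibrated)


def closed_loop_bias(target, measure, lo=0.0, hi=1.0, rel_tol=0.01,
                     max_iter=100):
    """Black-box approach: bisect on the bias using measurements only.

    Assumes measure() increases monotonically with the bias on [lo, hi].
    """
    mid = 0.5 * (lo + hi)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        value = measure(mid)
        if abs(value - target) <= rel_tol * target:
            break
        if value < target:
            lo = mid
        else:
            hi = mid
    return mid


i0_cal = 1e-15    # dark current estimated during calibration
i0_now = 4e-15    # hypothetical drift after a temperature change
target = 1e-9     # desired current, in amperes

v_open = open_loop_bias(target, i0_cal)
v_closed = closed_loop_bias(target, lambda v: measured_current(v, i0_now))
print(measured_current(v_open, i0_now) / target)    # ~4.0: off by the drift
print(measured_current(v_closed, i0_now) / target)  # within 1% of 1.0
```

The price of the closed-loop version is one measurement per iteration, which is exactly the slower interaction accepted in exchange for not needing a model of the hidden parameters.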

Finally, since hardware-based neuromorphic systems still lack a common structure that could be adopted by many groups around the world, every piece of software that controls them is strongly hardware dependent. With pyTune we wanted to make it possible to write a plugin for any neuromorphic system with minimal effort.

In conclusion, pyTune can easily be interfaced to hardware and software systems by providing the information needed to measure the parameters of interest directly.

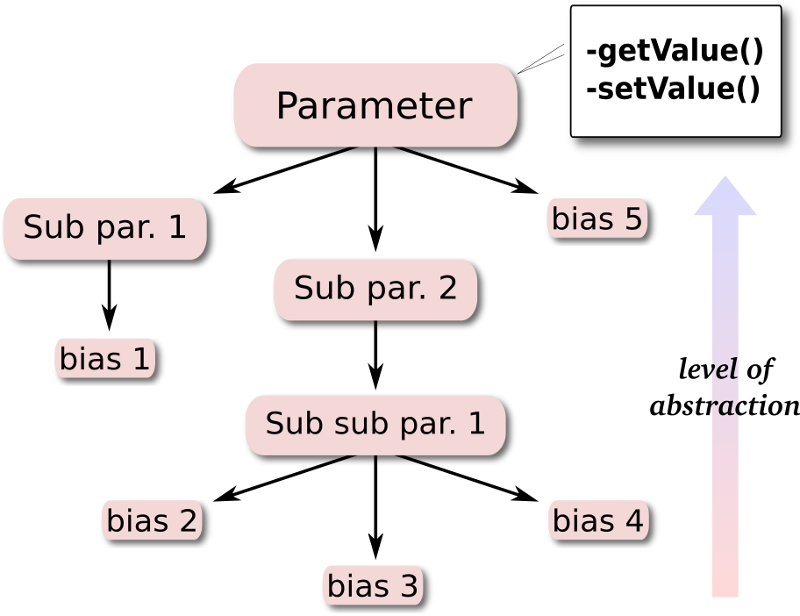

Dependency tree

The pyTune toolset relies on translating the problem into parameter dependencies. The user defines each parameter by its measurement routine (in the form of a getValue function) and by its dependencies on sub-parameters. At the lowest level, parameters are defined only by their interaction with the hardware, i.e. they represent biases of the circuits. To carry out the optimization that sets a parameter's value, the user can choose one of the minimization algorithms available in the package or define a custom one. Optionally, one can also define a specific cost function to be minimized. By default, the cost function is computed from $p$, the current measured value of the parameter, and $p_{desired}$, the desired value. Explicit options (such as the maximum tolerance on the desired value, the maximum number of iteration steps, etc.) can also be passed as arguments to the optimization function.
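The dependency tree described above can be pictured with a minimal Python sketch: parents are defined by a measurement routine over their children, leaves are plain biases. The `Param` class, its methods, and the toy linear rate model are hypothetical illustrations, not the pyTune API. The squared-error `default_cost` is likewise an assumption, since the exact form of pyTune's default cost is not given here.

```python
class Param(object):
    """Hypothetical dependency-tree node (illustration, not the pyTune API)."""

    def __init__(self, paramid, measure=None, children=()):
        self.paramid = paramid
        self.measure = measure      # measurement routine, the "getValue" of a parent
        self.children = dict((c.paramid, c) for c in children)
        self.value = None           # written/read directly for leaves (chip biases)

    def is_leaf(self):
        return not self.children

    def get_value(self):
        if self.is_leaf():
            return self.value       # a bias: read back what was written
        return self.measure(self.children)

    def set_value(self, value):
        # Only leaves are written directly; parents are reached by optimization.
        if not self.is_leaf():
            raise ValueError("set a parent by optimizing its children")
        self.value = value


def default_cost(p, p_desired):
    """Squared error between measured and desired value (an assumption)."""
    return (p - p_desired) ** 2


# Toy tree: 'rate' depends on two leaf biases through a made-up linear model.
injection = Param('injection_bias')
leak = Param('leak_bias')
rate = Param(
    'rate',
    measure=lambda ch: max(0.0, 100.0 * (ch['injection_bias'].get_value()
                                         - ch['leak_bias'].get_value())),
    children=[injection, leak],
)
injection.set_value(0.75)
leak.set_value(0.25)
print(rate.get_value())                      # 50.0
print(default_cost(rate.get_value(), 48.0))  # 4.0
```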

Finally, the sub-parameters' methods are mapped by the appropriate plugin onto the corresponding driver calls, in the case of a hardware system, or onto method calls and variables, in the case of a system simulated in software. Each system-specific mapping has to be implemented separately and included in pyTune as a plugin.
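In this picture, a plugin is the layer that turns writes and reads of leaf parameters into something concrete. The sketch below assumes a hypothetical two-method interface (`write`/`read`) and a fake driver standing in for real hardware; none of these names come from pyTune or pyNCS.

```python
class Backend(object):
    """Hypothetical plugin interface: where leaf parameters end up."""

    def write(self, name, value):
        raise NotImplementedError

    def read(self, name):
        raise NotImplementedError


class SimulatorBackend(Backend):
    """Software system: leaves map onto plain variables."""

    def __init__(self):
        self.variables = {}

    def write(self, name, value):
        self.variables[name] = value

    def read(self, name):
        return self.variables[name]


class HardwareBackend(Backend):
    """Hardware system: leaves map onto driver calls."""

    def __init__(self, driver):
        self.driver = driver        # hypothetical driver with set_bias/get_bias

    def write(self, name, value):
        self.driver.set_bias(name, value)

    def read(self, name):
        return self.driver.get_bias(name)


class FakeDriver(object):
    """Stand-in for a real chip driver; records every call it receives."""

    def __init__(self):
        self.calls = []
        self.biases = {}

    def set_bias(self, name, value):
        self.calls.append(('set_bias', name, value))
        self.biases[name] = value

    def get_bias(self, name):
        self.calls.append(('get_bias', name))
        return self.biases[name]


def set_leak(backend, value):
    """Generic code: works unchanged with any backend."""
    backend.write('leak_bias', value)
    return backend.read('leak_bias')


print(set_leak(SimulatorBackend(), 0.16))
print(set_leak(HardwareBackend(FakeDriver()), 0.16))
```

Keeping the system-specific code behind such an interface is what lets the tuning logic above it stay identical across systems.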

Step-by-step example

Here we report an example script that uses pyTune to tune a parameter. In this example we try to set the output frequency of a population of leaky integrate-and-fire neurons on a chip by injecting a constant current into all the neurons. The firing rate depends on the amount of injected current, which we control through the injection_bias bias, and on the leak of the neuron, which we control through the leak_bias bias.
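Why both biases matter can be seen from an idealized LIF neuron driven by a constant current $I$ with leak conductance $g_L$: the membrane relaxes toward $V_\infty = I/g_L$, and if $V_\infty$ exceeds the threshold $\theta$ the neuron fires at $r = 1 / \left(\tau \ln\frac{V_\infty}{V_\infty - \theta}\right)$, with $\tau = C/g_L$ and no refractory period. The sketch below evaluates this textbook formula in arbitrary units; it is an assumption-laden stand-in, not a model of the actual ifslwta circuit, whose biases are voltages rather than currents.

```python
import math


def lif_rate(i_inj, g_leak, c_mem=1.0, v_thresh=1.0):
    """Firing rate of an idealized LIF neuron driven by a constant current.

    Membrane equation: C dV/dt = I_inj - g_leak * V, reset to 0 at v_thresh.
    """
    v_inf = i_inj / g_leak                  # asymptotic membrane potential
    if v_inf <= v_thresh:
        return 0.0                          # threshold never reached: no spikes
    tau = c_mem / g_leak                    # membrane time constant
    t_spike = tau * math.log(v_inf / (v_inf - v_thresh))  # time to threshold
    return 1.0 / t_spike


for i_inj in (1.5, 2.0, 3.0):
    print(i_inj, lif_rate(i_inj, g_leak=1.0))
```

More injection raises the rate and a stronger leak lowers it, which is why both biases appear in the search parameters of this example.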

Let’s write the code. First, the imports and the setup of the system:

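```python
import logging
import logging.config
import numpy as np
import pyNCS
from pyTune.plugins.ncs import *

# Create the setup from the setup-type and setup XML files
setup = pyNCS.NeuroSetup('/usr/share/ncs/setupfiles/mc_setuptype.xml',
                         '/usr/share/ncs/setupfiles/mc.xml')
# Load the bias values of the 'ifslwta' chip from file
setup.chips['ifslwta'].loadBiases('biases/Biases_ifslwta')

# A population of 5 excitatory neurons on the 'ifslwta' chip
pop = pyNCS.Population('pop', 'Population of neurons')
pop.populate_by_number(setup, 'ifslwta', 'excitatory', 5)
```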

Now for the parameter definitions. A parameter can usually be created by calling its class with only an identifier string as argument:

```python
rate_param = Rate(paramid='rate')
```

At this point the parameter object is still empty. We need to tell pyTune which part of the system this parameter belongs to. This is done with the setContext method that every parameter provides. It works like an initialization function and, naturally, each parameter accepts different arguments. For example, the Rate parameter expects a pyNCS.Population as argument:

```python
rate_param.setContext(pop)
```

After the declaration, the system has automatically created all the parameters on which the rate depends. Keep in mind, however, that a parameter is nothing more than a variable in our computer's memory until we set its context, as mentioned earlier. This means that the injection bias and the leak bias have to be initialized too. As it happens, these two also accept a population as argument:

```python
rate_param.parameters['injection_bias'].setContext(pop)
rate_param.parameters['leak_bias'].setContext(pop)
```

Now we can set some starting values for the biases:

```python
rate_param.parameters['injection_bias'].setValue(2.71)
rate_param.parameters['leak_bias'].setValue(0.16)
```

Every parameter has a getValue and a setValue function. The leak and the injection are special parameters because they do not depend on anything else: they are simply two biases of the chip. In general, however, a parameter (the parent) depends on other parameters (its children). When we set a parent, the system automatically follows its children and modifies them until the parent reaches the value we asked for.
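One way such a recursive descent could work is sketched below: leaves (biases) are written directly, while a parent is reached by trying candidate values for one child at a time, recursing when the child is itself a parent. This greedy coordinate search is chosen only for brevity; in pyTune the search strategy is selected through the method argument, and `Node`, `set_value`, and the toy rate model are hypothetical.

```python
class Node(object):
    """Hypothetical tree node: leaves are biases, parents are measured."""

    def __init__(self, name, measure=None, children=(), candidates=(),
                 tolerance=0.0):
        self.name = name
        self.measure = measure              # callable(node) -> measured value
        self.children = list(children)
        self.candidates = list(candidates)  # values this node may be asked to take
        self.tolerance = tolerance
        self.value = 0.0                    # used by leaves only

    def get_value(self):
        if not self.children:
            return self.value
        return self.measure(self)


def set_value(node, target, max_rounds=10):
    """Recursively adjust children until |node - target| <= node.tolerance."""
    if not node.children:                   # a bias: write it directly
        node.value = target
        return node.get_value()
    for _ in range(max_rounds):
        for child in node.children:         # greedy: one child at a time
            if not child.candidates:
                continue
            best, best_err = None, float('inf')
            for candidate in child.candidates:
                set_value(child, candidate, max_rounds)  # recurse into child
                err = abs(node.get_value() - target)
                if err < best_err:
                    best, best_err = candidate, err
            set_value(child, best, max_rounds)
            if best_err <= node.tolerance:
                return node.get_value()
    return node.get_value()


injection = Node('injection_bias', candidates=[0.25 * k for k in range(9)])
leak = Node('leak_bias', candidates=[0.0, 0.25, 0.5])
leak.value = 0.25
rate = Node('rate', children=[injection, leak], tolerance=5.0,
            measure=lambda n: max(0.0, 100.0 * (n.children[0].get_value()
                                                - n.children[1].get_value())))
result = set_value(rate, 50.0)
print(result)
```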

The way the system walks the dependency tree is determined by a method, which can be passed as an argument to the setValue function. The methods used to search the parameter space accept a searchparams Python dictionary, which collects information specific to the chosen method. For example, with a linear-step search we have to choose the step size.
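As an illustration of a linear-step search driven by a searchparams-style dictionary, the following sketch sweeps one bias between 'min' and 'max' in fixed steps and stops once the measured value falls within 'tolerance' of the target. The key names mirror those used in this example; the `DEFAULTS` values, the function names, and the toy chip model are assumptions, not pyTune's implementation.

```python
DEFAULTS = {'min': 0.0, 'max': 1.0, 'step': 0.01, 'tolerance': 0.0}  # illustrative


def linear_search(measure, write, target, params):
    """Sweep one bias from 'min' to 'max' in fixed 'step's.

    Stops as soon as the measured value is within 'tolerance' of the target;
    otherwise keeps the best value seen. Missing keys fall back to DEFAULTS.
    """
    p = dict(DEFAULTS)
    p.update(params)
    n_steps = int(round((p['max'] - p['min']) / p['step']))
    best_x, best_err = p['min'], float('inf')
    for k in range(n_steps + 1):
        x = p['min'] + k * p['step']        # avoids accumulating rounding errors
        write(x)
        err = abs(measure() - target)
        if err < best_err:
            best_x, best_err = x, err
        if err <= p['tolerance']:
            break
    write(best_x)                           # leave the bias at the best value
    return best_x, best_err


# Toy "chip": the rate grows linearly with the injection bias (made-up model).
state = {'injection_bias': 2.7}


def measure_rate():
    return 500.0 * (state['injection_bias'] - 2.7)


def write_injection(x):
    state['injection_bias'] = x


searchparams = {'rate': {'tolerance': 5.0},
                'injection_bias': {'min': 2.7, 'max': 2.9, 'step': 0.0025}}
params = dict(searchparams['injection_bias'],
              tolerance=searchparams['rate']['tolerance'])
x, err = linear_search(measure_rate, write_injection, 50.0, params)
print(x, err)
```

Falling back to defaults for missing keys is what allows a searchparams dictionary to omit entries, such as the step of leak_bias in this example.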

In this example we use a linear search. If we do not want to use the default values, we have to create the searchparams dictionary:

```python
v = 50.0         # the value we want to set, in Hz
delta = .1 * v   # tolerance: 10% of the desired value

searchparams = {
    'rate': {
        'tolerance': delta,   # stop when the rate is in [v-delta, v+delta]
    },
    'injection_bias': {
        'max': 2.9,           # we can limit the parameter space
        'min': 2.7,
        'step': 0.0025,
    },
    'leak_bias': {
        'max': 0.2,
        'min': 0.14,
        # 'step': 0.005,      # no step given: the default value is used
    },
}
```

Now we are ready!

```python
x = rate_param.setValue(v, searchparams)
if abs(x - v) > delta:
    raise RuntimeError("pyTune doesn't work...")
```

Implementation of new parameters

With pyTune it is possible to control every parameter of a system independently. Each parameter has to be described in two specific files that the engine uses to handle it properly. Since pyTune relies on the direct measurement of parameters, one file explicitly implements the way the system measures the parameter; technically, it consists of a Python class. A second file, in XML format, contains the information about the dependencies of the parameter on other parameters of the system.

In what follows we show how to implement a new parameter for the pyNCS system.

Note

Since it is the set of implemented parameters that constitutes a plugin for pyTune, the following sections can also be used as a reference for writing new plugins.

The Python file template

```python
# -*- coding: utf-8 -*-
# HELLO,
# THIS IS A TEMPLATE FOR THE CREATION OF PARAMETERS IN THE NCS PLUGIN OF
# pyTune. PLEASE READ ALL THE STRINGS WRITTEN IN CAPITAL LETTERS AND FOLLOW THE
# DESCRIBED CONVENTIONS AS MUCH AS POSSIBLE.
from __future__ import with_statement
from os import path
from pyTune.base import Parameter
from pyAex import netClient
#from pyOsc import pyOsc
import numpy as np

# DEFINE SCOPE CHANNELS HERE #
#global scope
#scope = pyOsc.Oscilloscope( driver(debug=True) )
#scope.names( {
#    0 : 'Vmem127',
#    1 : 'Vsyn ("Vpls")',
#    2 : 'Vpls ("Vsyn")',
#    3 : 'VsynAER',
#    4 : 'VplsAER',
#    5 : 'Vk',
#    6 : 'Vwstd',
#    7 : 'Vmem (scanner)' })


class Template(Parameter):  # REPLACE WITH NameOfYourParameter
    '''
    DESCRIBE PARAMETER HERE.

    Units: "UNITS HERE"
    '''

    def __init__(self, paramid='Template',  # if your parameter has a long name:
                                            # PleaseUseThisFormat
                 xmlfilename='template.xml',  # now instead, use_this_format
                 history_filename='template.history'):  # and_also_here
        '''
        '''
        # DON'T FORGET TO CREATE your_parameter.xml!
        # PLEASE DON'T MODIFY THIS
        resources = path.join(path.dirname(__file__), xmlfilename)
        history_filename = path.join(path.dirname(__file__), history_filename)
        Parameter.__init__(self, paramid, xml=resources,
                           history_filename=history_filename)

    def setContext(self, population, synapses_id):
        """
        THIS SETS THE context OF A PARAMETER, I.E. POPULATION(S) ON WHICH IT
        ACTS. PLEASE DESCRIBE ARGUMENTS HERE, AS SHOWN IN THE FOLLOWING
        EXAMPLE.

        Sets the context for the parameter. Arguments are:
         - population = pyNCS.Population
         - synapses_id = The string of the synapses (e.g. 'excitatory0')
        """
        # DEFINE YOUR context HERE, FOR EXAMPLE:
        self.neurons = population.soma
        self.synapses = population.synapses[synapses_id]
        # DON'T FORGET YOU CAN USE Population.neuronblock, IN WHICH YOU FIND
        # ALL THE BIASES THAT YOU NEED. SEE EXAMPLE:
        self.bias = population.neuronblock.synapses[synapses_id].biases['tau']

    # THE SET OF FUNCTIONS STARTING WITH DOUBLE UNDERSCORE REPLACE THE ONES
    # INHERITED FROM pyTune.Parameter

    def __getValue__(self):
        """
        DESCRIBE HOW THE FUNCTION WORKS.
        """
        # YOU ALWAYS HAVE TO IMPLEMENT A GET FUNCTION.
        # DON'T FORGET YOU CAN DEFINE __get_startup__ IF YOU NEED TO DO
        # SOME OPERATION BEFORE THE ACTUAL MEASURE (IT IS AUTOMATICALLY CALLED,
        # PLEASE *DON'T* CALL IT HERE).
        # IF THE VALUE IS A FUNCTION OF THE VALUE OF THE SUB-PARAMETERS, YOU
        # CAN CALL THEM EXPLICITLY HERE VIA:
        # self.parameters['name_of_subparameter'].getValue()
        return value

    def __setValue__(self, value, searchparams):
        """
        DESCRIBE HOW THE FUNCTION WORKS.
        """
        # YOU DON'T NECESSARILY NEED TO SPECIFY A SET FUNCTION. IF YOU DON'T
        # SPECIFY IT HERE, A RECURSIVE FUNCTION WILL BE USED [TODO: is this true?]
        # searchparams IS A DICTIONARY CONTAINING ALL THE ADDITIONAL
        # INFORMATION YOUR ALGORITHM NEEDS, SUCH AS TOLERANCE, INCREMENT STEP
        # SIZE, ETC. AN EXAMPLE IS THE FOLLOWING:
        # searchparams = {
        #     'tolerance_style' : '%',
        #     'template' : {'tolerance' : 20,
        #                   'max' : 10,
        #                   'min' : 0,
        #                   'step' : 1,
        #                   'step_type' : 'linear',
        #                   },
        #     'subparam1' : {'tolerance' : 10,
        #                    'max' : 4,
        #                    'min' : 3,
        #                    'step' : 0.1,
        #                    'step_type' : 'exponential',
        #                    },
        #     'subparam2' : {'tolerance' : 10,
        #                    },
        #     }
        # PLEASE NOTICE: DON'T FORGET TO DEFINE A FUNCTION THAT CHECKS WHETHER
        # THE SEARCHPARAMS HAVE BEEN DEFINED AND PUTS DEFAULT VALUES
        # INSTEAD.
        return value

    def __get_method__(self):  # PLEASE REPLACE method WITH THE
                               # name_of_the_method
        '''
        DESCRIBE HOW THE METHOD WORKS.
        '''
        # IMPLEMENT YOUR OWN METHOD HERE. FOR EXAMPLE, YOU MAY HAVE TWO
        # DIFFERENT WAYS TO MEASURE YOUR PARAMETER, ONE USING THE SCOPE, ONE
        # USING SPIKING DATA. YOU CAN THEN DEFINE __get_scope__ AND
        # __get_spiking__. YOU CAN DEFINE AS MANY METHODS AS YOU WANT AND THEN
        # CALL THEM IN YOUR SCRIPT BY:
        # getValue(method='your_method_here')
        return value

    def __set_method__(self, value, searchparams):  # PLEASE REPLACE method WITH THE
                                                    # name_of_the_method
        '''
        DESCRIBE HOW THE METHOD WORKS.
        '''
        # IMPLEMENT YOUR METHOD HERE. READ __get_method__ COMMENTS TO KNOW MORE
        # ABOUT METHODS.
        return value

    def __get_startup__(self):
        """
        """
        # THIS FUNCTION IS CALLED AT EVERY getValue CALL AND IT IS USED TO
        # APPLY PRELIMINARY SETTINGS IF NEEDED, E.G. SET ALL LEARNING SYNAPSES
        # TO HIGH IF YOU WANT TO MEASURE THEIR EFFICACY.
        return

    def __set_startup__(self):
        """
        """
        # THIS FUNCTION IS CALLED AT EVERY setValue CALL AND IT IS USED TO
        # APPLY PRELIMINARY SETTINGS IF NEEDED
        return
```
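The template's comments describe two conventions: `__get_startup__` is called automatically at every getValue call, and `getValue(method='name')` selects a method defined as `__get_name__`. The sketch below shows one plausible way such dispatch can be written with `getattr`; `MiniParameter` and `ToyRate` are hypothetical stand-ins, not `pyTune.base.Parameter`. Names that end in two underscores are not subject to Python's private-name mangling, so `getattr` finds them as written.

```python
class MiniParameter(object):
    """Hypothetical stand-in for pyTune.base.Parameter (dispatch only)."""

    def getValue(self, method=None):
        self.__get_startup__()                          # always run first
        if method is None:
            return self.__getValue__()
        return getattr(self, '__get_%s__' % method)()   # e.g. __get_scope__

    def __get_startup__(self):
        pass                                            # default: nothing to prepare

    def __getValue__(self):
        raise NotImplementedError


class ToyRate(MiniParameter):
    """Hypothetical parameter with two measurement methods."""

    def __init__(self):
        self.log = []

    def __get_startup__(self):
        self.log.append('startup')                      # e.g. configure the chip

    def __getValue__(self):
        return self.__get_spiking__()                   # default method

    def __get_spiking__(self):
        return 50.0                                     # e.g. spike count / duration

    def __get_scope__(self):
        return 49.0                                     # e.g. analyze a scope trace


r = ToyRate()
print(r.getValue(), r.getValue(method='scope'))
```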

The XML file template

```xml
<?xml version="1.0" ?>
<parameter paramid='example_parameter' class='ExampleParameter'>
    <MAX>inf</MAX>
    <MIN>0</MIN>
    <units>Hz</units>
    <!--<method>linearSweep</method>-->
    <deps>
        <parameter paramid='efficacy' class='Efficacy'/>
        <parameter paramid='injection_bias' class='Bias'/>
    </deps>
</parameter>
```
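How pyTune consumes this file is not detailed here. As a minimal sketch, assuming only the element and attribute names shown in the template, its contents can be read with Python's standard `xml.etree.ElementTree`; the function name `read_parameter_description` is hypothetical.

```python
import xml.etree.ElementTree as ET

# The XML template above, embedded as a string.
XML = """<?xml version="1.0" ?>
<parameter paramid='example_parameter' class='ExampleParameter'>
    <MAX>inf</MAX>
    <MIN>0</MIN>
    <units>Hz</units>
    <!--<method>linearSweep</method>-->
    <deps>
        <parameter paramid='efficacy' class='Efficacy'/>
        <parameter paramid='injection_bias' class='Bias'/>
    </deps>
</parameter>"""


def read_parameter_description(xml_text):
    """Return the parameter's metadata and its list of dependencies."""
    root = ET.fromstring(xml_text)
    return {
        'paramid': root.get('paramid'),
        'class': root.get('class'),
        'max': float(root.findtext('MAX')),    # 'inf' parses as float infinity
        'min': float(root.findtext('MIN')),
        'units': root.findtext('units'),
        # one (paramid, class) pair per child parameter in <deps>
        'deps': [(dep.get('paramid'), dep.get('class'))
                 for dep in root.findall('./deps/parameter')],
    }


print(read_parameter_description(XML))
```

The commented-out `<method>` element is ignored by the parser, so uncommenting it would be the natural way to expose a default search method, if the engine reads that element.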